Anh Nguyen / Aengus

I am a Predoctoral Research Resident at Qualcomm AI Research, where I am advised by Principal Scientist Dr. Anh Tran.

🚀 Fall 2026 Intake: I am actively pursuing a PhD position in Computer Science and am excited to collaborate on impactful research!

Contact: anng@qti.qualcomm.com

I work on deep generative modeling as a principled route to machine intelligence beyond human levels.

Research Statement: My long-term goal is to build systems capable of understanding, reasoning, planning, and acquiring physical intuition about the world.

My current research focuses on Efficient & Robust Multimodal Intelligence, aiming to resolve the trade-offs in foundation models through two core pillars: (1) Efficiency & Scalability to minimize training and inference costs, and (2) Robustness & Controllability to enforce alignment and reliability.

Most recently, my work on One-step Generative Modeling & Distillation (NeurIPS & ICCV 2025) collapses iterative inference into real-time, high-fidelity synthesis, while my research on Multimodal Representation (ICCV 2025) leverages internal semantics for zero-shot, fine-grained controllability.

Research Readiness: I can independently lead the entire research lifecycle for top-tier conferences, driving projects from problem formulation and experimentation to final publication.

Previously: I spent two years as a predoctoral research resident in the highly selective AI Residency Program at VinAI Research, a lab ranked among the world's top 20 for AI research based on its output at top-tier conferences such as CVPR and NeurIPS.

The program provided intensive, PhD-level training, during which I was responsible for the entire research lifecycle, from problem formulation and experimentation to final publication. This rigorous environment has a proven record of 119 PhD scholarships worldwide. The program's elite status was further underscored by Qualcomm's acquisition of its generative AI unit in 2025.

Outside the Lab: I enjoy the combination of mathematics, coding, and intuition. Away from the keyboard, you can find me clearing my mind on long-distance runs 🏃‍♂️

news

| Jan 26, 2026 | ✨ On the Expressiveness of Visual Prompt Experts got accepted at ICLR 2026. By formalizing the connection between Attention and Mixture of Experts (MoE), we identify a key limitation in standard VPT: the restricted expressiveness of static prompts. To resolve this, we propose Visual Adaptive Prompt Tuning (VAPT), which conditions prompt experts on the input instance. This formulation is theoretically proven to achieve optimal sample efficiency and yields substantial performance gains, surpassing full fine-tuning on VTAB-1K by 7.34% and outperforming VPT in low-data regimes (1% data) by over 50%, all while using fewer parameters. |

|---|---|

| Oct 6, 2025 | 🏆 I am honored to receive the Outstanding Resident in Research and Applied Demo Award 2025! The award is part of the 2025 Recognition Awards from the Qualcomm AI Residency Program, which honors “the exceptional achievements of our residents this year.” |

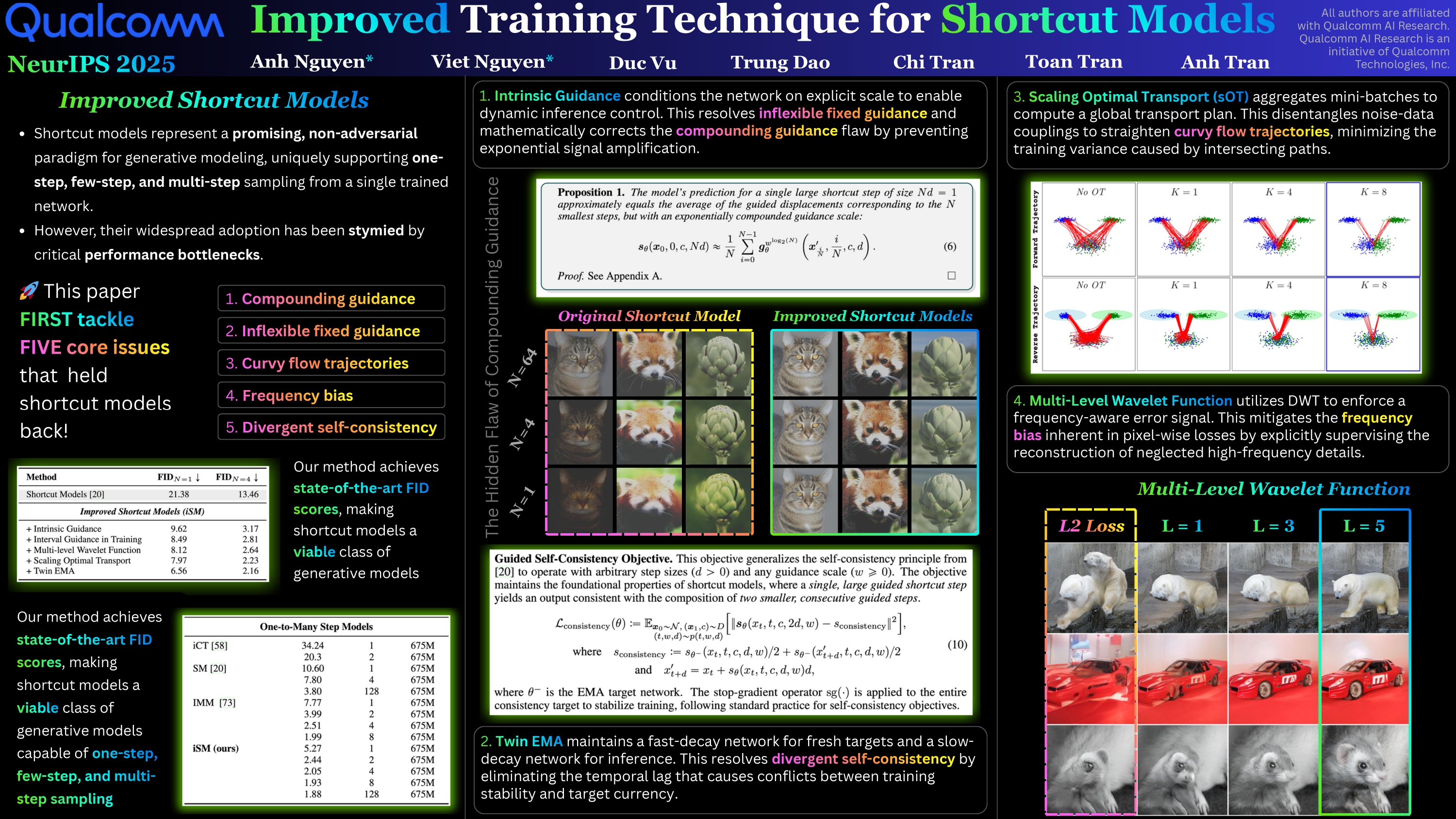

| Sep 18, 2025 | ⚡ Improved Training Technique for Shortcut Models got accepted at NeurIPS 2025. This paper tackle the five core issues that held shortcut models back: the hidden flaw of compounding guidance, inflexible fixed guidance, frequency bias, divergent self-consistency, and curvy flow trajectories. Our method achieves state-of-the-art FID scores, making shortcut models a viable class of generative models capable of one-step, few-step, and multi-step sampling. |

| Jun 26, 2025 | ⚡ Supercharged One-step Text-to-Image Diffusion Models with Negative Prompts got accepted at ICCV 2025. This paper, for the first time, enables negative guidance in one-step diffusion models, unlocking precise creative control without sacrificing speed. The proposed method boosts both controllability and quality, achieving a new state-of-the-art HPSv2 score. |